Applies To: Windows Server 2016, Windows Server 2012 R2, Windows Server 2012

This topic describes how to deploy Storage Spaces on a stand-alone

Windows Server 2012−based server. For information about how to create a

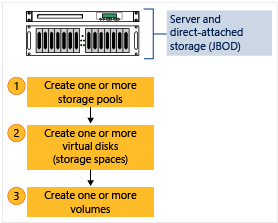

clustered storage space, see Deploy a Storage Spaces cluster on Windows Server 2012 R2.To create a storage space, you must first create one or more storage pools. A storage pool is a collection of physical disks. A storage pool enables storage aggregation, elastic capacity expansion, and delegated administration.

From a storage pool, you can create one or more virtual disks. These virtual disks are also referred to as storage spaces. A storage space appears to the Windows operating system as a regular disk from which you can create formatted volumes. When you create a virtual disk through the File and Storage Services user interface, you can configure the resiliency type (simple, mirror, or parity), the provisioning type (thin or fixed), and the size. Through Windows PowerShell, you can set additional parameters such as the number of columns, the interleave value, and which physical disks in the pool to use. For information about these additional parameters, see New-VirtualDisk and What are columns and how does Storage Spaces decide how many to use? in Storage Spaces Frequently Asked Questions (FAQ).
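As a rough sketch of the additional Windows PowerShell parameters described above, the following example creates a simple space with an explicit column count and interleave value on two specific disks. The names StoragePool1, VirtualDisk1, PhysicalDisk1, and PhysicalDisk2, and the chosen values, are assumptions for illustration only; substitute the names and values from your own environment.

```powershell
# Assumed (hypothetical) disk names; replace them with names that Get-PhysicalDisk reports.
$disks = Get-PhysicalDisk |
    Where-Object { $_.FriendlyName -in 'PhysicalDisk1', 'PhysicalDisk2' }

# Parameters for a simple (striped) space: two columns, a 64 KB interleave,
# and only the two disks selected above.
$params = @{
    StoragePoolFriendlyName = 'StoragePool1'   # assumed pool name
    FriendlyName            = 'VirtualDisk1'   # assumed virtual disk name
    ResiliencySettingName   = 'Simple'
    NumberOfColumns         = 2
    Interleave              = 64KB
    PhysicalDisksToUse      = $disks
    Size                    = 50GB
}
New-VirtualDisk @params
```

Splatting the parameters through a hash table keeps the command readable without line-continuation characters.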

Note

You cannot use a storage space to host the Windows operating system.

From a virtual disk, you can create one or more volumes. When you create a volume, you can configure the size, the drive letter or folder, the file system (NTFS file system or Resilient File System (ReFS)), the allocation unit size, and an optional volume label.

The following figure illustrates the Storage Spaces workflow.

Figure 1: Storage Spaces workflow


Note

This topic includes sample Windows PowerShell cmdlets that you can use to automate some of the procedures described. For more information, see Using Cmdlets.


Prerequisites

To use Storage Spaces on a stand-alone Windows Server 2012-based server, make sure that the physical disks that you want to use meet the following prerequisites.

Important

If you want to deploy Storage Spaces on a failover cluster, see the topic Deploy a Storage Spaces cluster on Windows Server 2012 R2. Note that a failover cluster deployment has different prerequisites, such as the supported disk bus types, the supported resiliency types, and the minimum number of disks that are required.


| Area | Requirement |
|---|---|
| Disk bus types | Serial Attached SCSI (SAS) or Serial Advanced Technology Attachment (SATA). Note: You can also use USB drives. However, we do not recommend that you use USB drives in a server environment. Note: Storage Spaces does not support iSCSI and Fibre Channel controllers. |
| Disk configuration | Physical disks must be at least 4 GB. Disks must be blank and not formatted. Do not create volumes. |
| HBA considerations | We recommend that you use simple host bus adapters (HBAs) that do not support RAID functionality. If RAID capable, HBAs must be in non-RAID mode with all RAID functionality disabled. Adapters must not abstract the physical disks, cache data, or obscure any attached devices. This includes enclosure services that are provided by attached just-a-bunch-of-disks (JBOD) devices. Storage Spaces is compatible only with HBAs where you can completely disable all RAID functionality. |
| JBOD enclosures | A JBOD enclosure is optional. For full Storage Spaces functionality if you are using a JBOD enclosure, verify with your storage vendor that the JBOD enclosure supports Storage Spaces. To determine whether the JBOD enclosure supports enclosure and slot identification, run the following Windows PowerShell cmdlet: `Get-PhysicalDisk \| ? {$_.BusType -eq "SAS"} \| fc`. If the EnclosureNumber and SlotNumber fields contain values, this indicates that the enclosure supports these features. |
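The disk prerequisites above can be checked with a short script. The following is a minimal sketch, not a complete validation: it covers only the bus type, the 4 GB minimum size, and the CanPool flag, and it does not check the HBA or JBOD requirements.

```powershell
# List every physical disk with the properties that matter for the prerequisites.
Get-PhysicalDisk |
    Select-Object FriendlyName, BusType, CanPool,
        @{ Name = 'SizeGB'; Expression = { [math]::Round($_.Size / 1GB, 1) } } |
    Format-Table -AutoSize

# Keep only disks that are SAS or SATA, at least 4 GB, and eligible for pooling.
$candidates = Get-PhysicalDisk |
    Where-Object { $_.BusType -in 'SAS', 'SATA' -and $_.Size -ge 4GB -and $_.CanPool }

$candidates | Format-Table FriendlyName, BusType, Size -AutoSize
```

A disk that already contains partitions or that belongs to another pool typically reports CanPool as False, so it is excluded from the second list.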

| Resiliency Type | Disk Requirements | When To Use |
|---|---|---|
| Simple. Stripes data across physical disks. Maximizes disk capacity and increases throughput. Does not provide resiliency. | Requires at least one physical disk. Warning: A simple space does not protect from disk failure. | Do not use to host irreplaceable data. Use to host temporary or easily recreated data at a reduced cost. Suited for high performance workloads where resiliency is not required or is provided by the application. |
| Mirror. Stores two or three copies of the data across the set of physical disks. Increases reliability, but reduces capacity. Duplication occurs with every write. A mirror space also stripes the data across multiple physical drives. Greater data throughput than parity, and lower access latency. Uses dirty region tracking (DRT) to track modifications to the disks in the pool. When the system resumes from an unplanned shutdown and the spaces are brought back online, DRT makes disks in the pool consistent with each other. | Requires at least two physical disks to protect from single disk failure. Requires at least five physical disks to protect from two simultaneous disk failures. | Use for most deployments. For example, mirror spaces are suited for a general-purpose file share or a virtual hard disk (VHD) library. |
| Parity. Stripes data and parity information across physical disks. Increases reliability when it is compared to a simple space, but somewhat reduces capacity. Increases resiliency through journaling. This helps prevent data corruption if an unplanned shutdown occurs. | Requires at least three physical disks to protect from single disk failure. | Use for workloads that are highly sequential, such as archive or backup. |
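The disk requirements in the preceding table can be expressed as a small lookup. The following sketch uses a hypothetical helper function, Get-MinimumDiskCount, which is not part of the Storage module; it only encodes the minimum disk counts listed in the table so that a script can compare them with the number of disks in a pool.

```powershell
# Hypothetical helper (not a built-in cmdlet): returns the minimum number of
# physical disks from the table above for a given resiliency type.
function Get-MinimumDiskCount {
    param(
        [Parameter(Mandatory)]
        [ValidateSet('Simple', 'Mirror', 'Parity')]
        [string]$ResiliencyType,

        # Only used for Mirror: 2 = two-way mirror, 3 = three-way mirror.
        [ValidateSet(2, 3)]
        [int]$NumberOfDataCopies = 2
    )

    switch ($ResiliencyType) {
        'Simple' { 1 }
        'Parity' { 3 }
        'Mirror' { if ($NumberOfDataCopies -eq 3) { 5 } else { 2 } }
    }
}

# Example: a three-way mirror needs at least five disks.
Get-MinimumDiskCount -ResiliencyType Mirror -NumberOfDataCopies 3
```

To compare the result with an existing pool, you could count the disks returned by Get-StoragePool piped to Get-PhysicalDisk, excluding any disks whose Usage is Hot Spare.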

Step 1: Create a storage pool

You must first group available physical disks into one or more storage pools.

To create a storage pool

- In the Server Manager navigation pane, click File and Storage Services.

- In the navigation pane, click the Storage Pools page.

By default, available disks are included in a pool that is named the primordial pool. If no primordial pool is listed under STORAGE POOLS, this indicates that the storage does not meet the requirements for Storage Spaces. Make sure that the disks meet the requirements that are outlined in the Prerequisites section.

Tip

If you select the Primordial storage pool, the available physical disks are listed under PHYSICAL DISKS.

- Under STORAGE POOLS, click the TASKS list, and then click New Storage Pool.

The New Storage Pool Wizard opens.

- On the Before you begin page, click Next.

- On the Specify a storage pool name and subsystem page, enter a name and optional description for the storage pool, select the group of available physical disks that you want to use, and then click Next.

- On the Select physical disks for the storage pool page, do the following, and then click Next:

- Select the check box next to each physical disk that you want to include in the storage pool.

- If you want to designate one or more disks as hot spares, under Allocation, click the drop-down arrow, and then click Hot Spare.


- On the Confirm selections page, verify that the settings are correct, and then click Create.

- On the View results page, verify that all tasks completed, and then click Close.

Note

Optionally, to continue directly to the next step, you can select the Create a virtual disk when this wizard closes check box.

- Under STORAGE POOLS, verify that the new storage pool is listed.

Windows PowerShell equivalent commands

The following Windows PowerShell cmdlets perform the same function as the preceding procedure. Enter each cmdlet on a single line, even though they may appear word-wrapped across several lines here because of formatting constraints.

The following example shows which physical disks are available in the primordial pool.

Get-StoragePool -IsPrimordial $true | Get-PhysicalDisk | Where-Object CanPool -eq $True

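The following example creates a storage pool that is named StoragePool1 and that uses all available disks.

New-StoragePool -FriendlyName StoragePool1 -StorageSubsystemFriendlyName "Storage Spaces*" -PhysicalDisks (Get-PhysicalDisk -CanPool $True)

The following example creates a storage pool that is named StoragePool1 and that uses four of the available disks.

New-StoragePool -FriendlyName StoragePool1 -StorageSubsystemFriendlyName "Storage Spaces*" -PhysicalDisks (Get-PhysicalDisk PhysicalDisk1, PhysicalDisk2, PhysicalDisk3, PhysicalDisk4)

The following example sequence adds the available physical disk PhysicalDisk5 to the storage pool StoragePool1 as a hot spare.

$PDToAdd = Get-PhysicalDisk -FriendlyName PhysicalDisk5

Add-PhysicalDisk -StoragePoolFriendlyName StoragePool1 -PhysicalDisks $PDToAdd -Usage HotSpare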
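The last step of the procedure, verifying that the new storage pool is listed, can also be done in Windows PowerShell. The following is a minimal sketch that assumes the example pool name StoragePool1.

```powershell
# Show the pool, its health, and how much of its capacity is allocated.
Get-StoragePool -FriendlyName StoragePool1 |
    Format-Table FriendlyName, OperationalStatus, HealthStatus, Size, AllocatedSize -AutoSize

# List the member disks and how each one is used (for example, Auto-Select or Hot Spare).
Get-StoragePool -FriendlyName StoragePool1 |
    Get-PhysicalDisk |
    Format-Table FriendlyName, Usage, Size, HealthStatus -AutoSize
```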

Step 2: Create a virtual disk

Next, you must create one or more virtual disks from the storage pool. When you create a virtual disk, you can select how the data is laid out across the physical disks. This affects both reliability and performance. You can also select whether to create thin- or fixed-provisioned disks.

To create a virtual disk

- If the New Virtual Disk Wizard is not already open, on the Storage Pools page in Server Manager, under STORAGE POOLS, make sure that the desired storage pool is selected.

- Under VIRTUAL DISKS, click the TASKS list, and then click New Virtual Disk.

The New Virtual Disk Wizard opens.

- On the Before you begin page, click Next.

- On the Select the storage pool page, click the desired storage pool, and then click Next.

- On the Specify the virtual disk name page, enter a name and optional description, and then click Next.

- On the Select the storage layout page, click the desired layout, and then click Next.

Note

If you select a layout where you do not have enough physical disks, you will receive an error message when you click Next. For information about which layout to use and the disk requirements, see the Prerequisites section of this topic.

- If you selected Mirror as the storage layout, and you have five or more disks in the pool, the Configure the resiliency settings page appears. Select one of the following options:

- Two-way mirror

- Three-way mirror


- On the Specify the provisioning type page, click one of the following options, and then click Next.

- Thin

With thin provisioning, space is allocated on an as-needed basis. This optimizes the usage of available storage. However, because this enables you to over-allocate storage, you must carefully monitor how much disk space is available.

- Fixed

With fixed provisioning, the storage capacity is allocated immediately, at the time a virtual disk is created. Therefore, fixed provisioning uses space from the storage pool that is equal to the virtual disk size.

Tip

With Storage Spaces, you can create both thin- and fixed-provisioned virtual disks in the same storage pool. For example, you could use a thin-provisioned virtual disk to host a database and a fixed-provisioned virtual disk to host the associated log files.


- On the Specify the size of the virtual disk page, do the following:

If you selected thin provisioning in the previous step, in the Virtual disk size box, enter a virtual disk size, select the units (MB, GB, or TB), and then click Next.

If you selected fixed provisioning in the previous step, click one of the following:

- Specify size

To specify a size, enter a value in the Virtual disk size box, and then select the units (MB, GB, or TB).

If you use a storage layout other than simple, the virtual disk uses more free space than the size that you specify. To avoid a potential error where the size of the volume exceeds the storage pool free space, you can select the Create the largest virtual disk possible, up to the specified size check box.

- Maximum size

Select this option to create a virtual disk that uses the maximum capacity of the storage pool.


- On the Confirm selections page, verify that the settings are correct, and then click Create.

- On the View results page, verify that all tasks completed, and then click Close.

Tip

By default, the Create a volume when this wizard closes check box is selected. This takes you directly to the next step.
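As noted in the sizing step, a virtual disk with a layout other than simple uses more pool space than the size that you specify. The following sketch estimates that footprint with a hypothetical helper function, Get-EstimatedPoolFootprint. It assumes that a mirror space stores one full copy per data copy and that a single-parity space stores one column of parity per stripe. It ignores allocation rounding and metadata, so treat the result as an approximation rather than the value that Storage Spaces reports.

```powershell
# Hypothetical helper (not a built-in cmdlet): estimates the pool capacity, in bytes,
# that a fixed-provisioned virtual disk of the given size consumes.
function Get-EstimatedPoolFootprint {
    param(
        [Parameter(Mandatory)]
        [double]$Size,

        [Parameter(Mandatory)]
        [ValidateSet('Simple', 'Mirror', 'Parity')]
        [string]$ResiliencyType,

        # Mirror only: 2 = two-way mirror, 3 = three-way mirror.
        [ValidateSet(2, 3)]
        [int]$NumberOfDataCopies = 2,

        # Parity only: assumed number of columns (data plus parity) per stripe.
        [ValidateRange(3, 8)]
        [int]$NumberOfColumns = 3
    )

    switch ($ResiliencyType) {
        'Simple' { $Size }
        'Mirror' { $Size * $NumberOfDataCopies }
        'Parity' { [math]::Ceiling($Size * $NumberOfColumns / ($NumberOfColumns - 1)) }
    }
}

# Example: estimated footprint, in GB, of a 50 GB two-way mirror space.
(Get-EstimatedPoolFootprint -Size 50GB -ResiliencyType Mirror) / 1GB
```

Comparing the estimate with the free space in the pool before you create a fixed-provisioned disk can help you avoid the size error that the wizard step describes.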

Windows PowerShell equivalent commands

The following Windows PowerShell cmdlets perform the same function as the preceding procedure. Enter each cmdlet on a single line, even though they may appear word-wrapped across several lines here because of formatting constraints.

The following example creates a 50 GB virtual disk that is named VirtualDisk1 on a storage pool that is named StoragePool1.

New-VirtualDisk -StoragePoolFriendlyName StoragePool1 -FriendlyName VirtualDisk1 -Size (50GB)

The following example creates a mirrored virtual disk that is named VirtualDisk1 on a storage pool that is named StoragePool1. The disk uses the maximum storage capacity of the storage pool.

New-VirtualDisk -StoragePoolFriendlyName StoragePool1 -FriendlyName VirtualDisk1 -ResiliencySettingName Mirror -UseMaximumSize

The following example creates a 50 GB thin-provisioned virtual disk that is named VirtualDisk1 on a storage pool that is named StoragePool1.

New-VirtualDisk -StoragePoolFriendlyName StoragePool1 -FriendlyName VirtualDisk1 -Size (50GB) -ProvisioningType Thin

The following example creates a 20 GB fixed-provisioned virtual disk that is named VirtualDisk1 on a storage pool that is named StoragePool1. The virtual disk uses three-way mirroring.

Note

You must have at least five physical disks in the storage pool for this cmdlet to work. (This does not include any disks that are allocated as hot spares.)

New-VirtualDisk -StoragePoolFriendlyName StoragePool1 -FriendlyName VirtualDisk1 -ResiliencySettingName Mirror -NumberOfDataCopies 3 -Size 20GB -ProvisioningType Fixed
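After you create a virtual disk, you may want to confirm its layout and, for thin provisioning, keep an eye on the remaining pool capacity. The following is a minimal sketch that assumes the example names VirtualDisk1 and StoragePool1.

```powershell
# Show the layout, provisioning type, and actual pool footprint of the virtual disk.
Get-VirtualDisk -FriendlyName VirtualDisk1 |
    Format-List FriendlyName, ResiliencySettingName, NumberOfDataCopies,
        NumberOfColumns, ProvisioningType, Size, FootprintOnPool

# Report how much pool capacity has not been allocated yet. This is useful
# when thin-provisioned disks can grow beyond the physical capacity.
$pool   = Get-StoragePool -FriendlyName StoragePool1
$freeGB = [math]::Round(($pool.Size - $pool.AllocatedSize) / 1GB, 1)
'{0}: {1} GB unallocated' -f $pool.FriendlyName, $freeGB
```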

Step 3: Create a volume

Next, you must create a volume from the virtual disk. You can assign an optional drive letter or folder, and then format the volume with a file system.

To create a volume

- If the New Volume Wizard is not already open, on the Storage Pools page in Server Manager, under VIRTUAL DISKS, right-click the desired virtual disk, and then click New Volume.

The New Volume Wizard opens.

- On the Before you begin page, click Next.

- On the Select the server and disk page, do the following, and then click Next.

- In the Server area, click the server on which you want to provision the volume.

- In the Disk area, click the virtual disk on which you want to create the volume.


- On the Specify the size of the volume page, enter a volume size, specify the units (MB, GB, or TB), and then click Next.

- On the Assign to a drive letter or folder page, configure the desired option, and then click Next.

- On the Select file system settings page, do the following, and then click Next.

- In the File system list, click NTFS or ReFS.

- In the Allocation unit size list, either leave the setting at Default or set the allocation unit size.

Note

For more information about allocation unit size, see Default cluster size for NTFS, FAT, and exFAT.

- Optionally, in the Volume label box, enter a volume label name, for example HR Data.


- On the Confirm selections page, verify that the settings are correct, and then click Create.

- On the View results page, verify that all tasks completed, and then click Close.

- To verify that the volume was created, in Server Manager, click the Volumes page.

The volume is listed under the server where it was created. You can also verify that the volume appears in Windows Explorer.

Windows PowerShell equivalent commands

The following Windows PowerShell cmdlets perform the same function as the previous procedure. Enter the command on a single line.

The following example initializes the disks for virtual disk VirtualDisk1, creates a partition with an assigned drive letter, and then formats the volume with the default NTFS file system.

Get-VirtualDisk -FriendlyName VirtualDisk1 | Get-Disk | Initialize-Disk -PassThru | New-Partition -AssignDriveLetter -UseMaximumSize | Format-Volume
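If you want to set the options that the New Volume Wizard exposes (drive letter, file system, allocation unit size, and volume label), the same pipeline can be split into separate steps. The following is a sketch under stated assumptions: the drive letter E, the 64 KB allocation unit size, and the label HR Data are example values, and the script assumes that VirtualDisk1 maps to a single disk.

```powershell
# Assumed example values: drive letter E, 64 KB allocation unit size, label "HR Data".
$disk = Get-VirtualDisk -FriendlyName VirtualDisk1 | Get-Disk

# Initialize the disk with a GPT partition table if it has not been initialized yet.
if ($disk.PartitionStyle -eq 'RAW') {
    Initialize-Disk -Number $disk.Number -PartitionStyle GPT
}

# Create one partition that spans the whole disk, and then format it with NTFS.
New-Partition -DiskNumber $disk.Number -DriveLetter E -UseMaximumSize |
    Format-Volume -FileSystem NTFS -AllocationUnitSize 65536 -NewFileSystemLabel 'HR Data' -Confirm:$false
```

Splitting the steps makes it easier to stop after initialization or to choose a partition size smaller than the full disk by replacing -UseMaximumSize with -Size.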